The State of MLOps in 2025

As of 2025, MLOps has evolved from a niche practice into a critical discipline: 78% of enterprises now have dedicated MLOps teams, up from 32% in 2023. The global MLOps market is projected to reach $23.1 billion by 2027, growing at a CAGR of 38.7%. This growth is driven by the increasing complexity of AI systems; the average enterprise now manages more than 250 ML models in production. The latest MLOps 3.0 paradigm emphasizes end-to-end automation, robust monitoring, and seamless integration with existing DevOps and DataOps workflows.

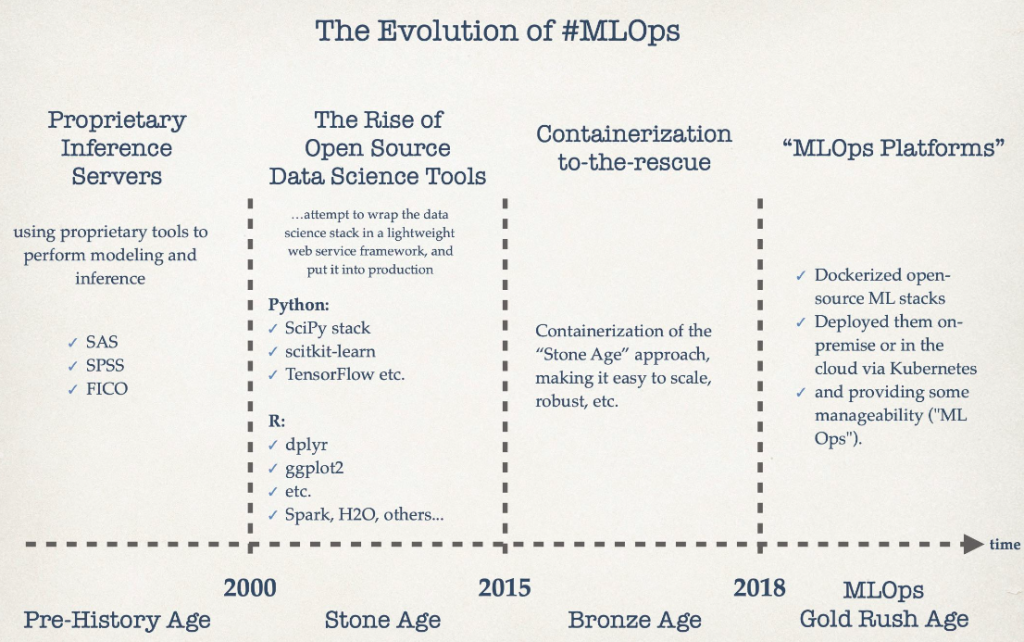

Evolution of MLOps Practices 2020-2025. Source: Evolution and Timeline of MLOps 2024

78% of enterprises have dedicated MLOps teams (up from 32% in 2023)

Global MLOps market projected to reach $23.1B by 2027

Average enterprise manages 250+ ML models in production

MLOps 3.0 adoption has reduced time-to-production by 67%

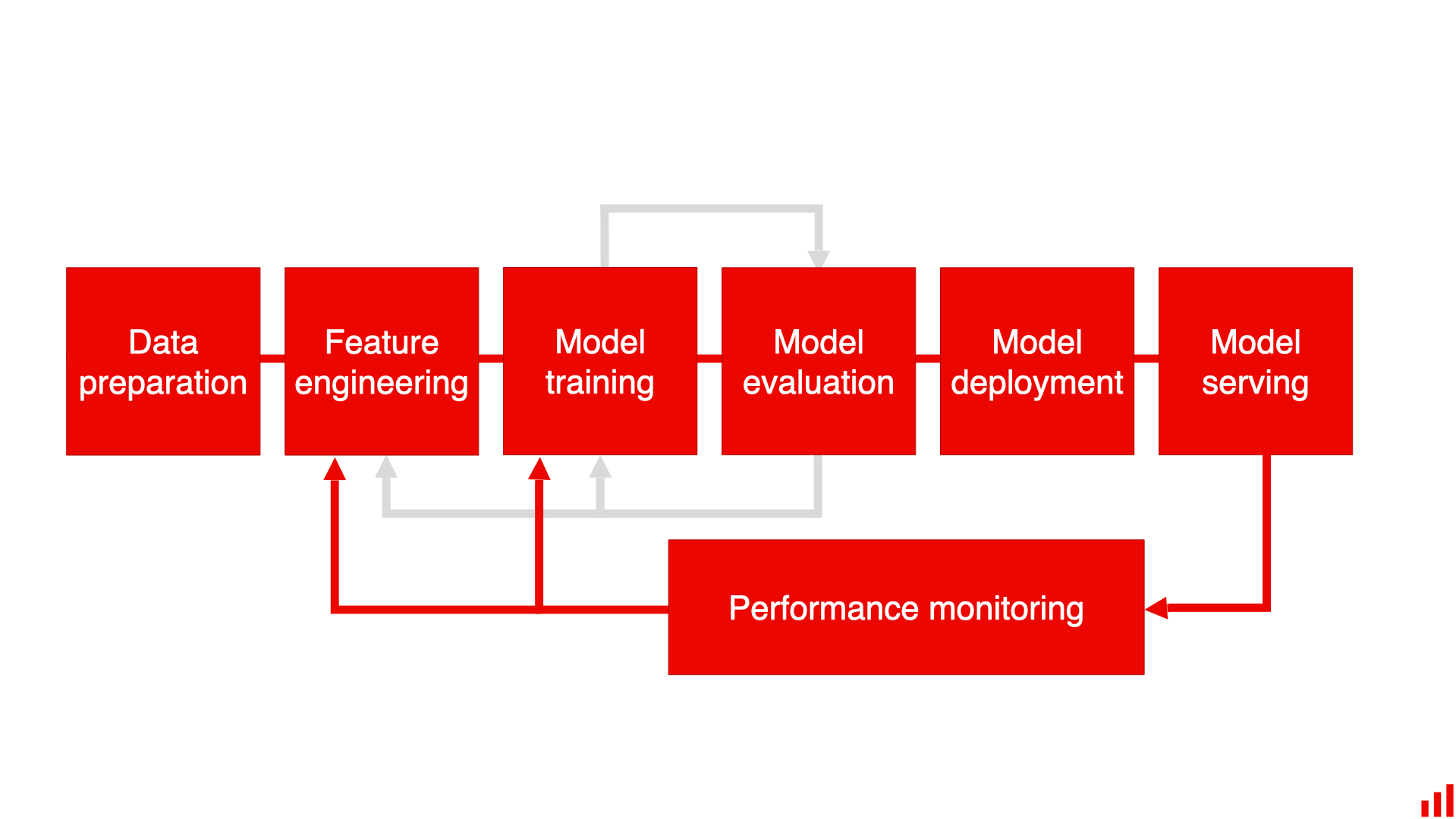

Modern MLOps Architecture

The MLOps technology stack has matured significantly, with modern architectures built around cloud-native principles and Kubernetes. The key components of a 2025 MLOps stack include: 1) Feature Stores for consistent feature engineering, 2) Model Registries for version control and lifecycle management, 3) Automated Pipelines for CI/CD, 4) Observability Platforms for model monitoring, and 5) Model Serving Infrastructure with canary deployments and A/B testing capabilities. The shift to microservices architecture has enabled teams to independently scale different components while maintaining system reliability.

89% of enterprises now use feature stores (up from 41% in 2023)

63% reduction in model deployment time with GitOps for ML

45% improvement in model performance through automated retraining

92% of organizations use Kubernetes for ML workload orchestration
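
To make the model registry component above more concrete, here is a minimal in-memory sketch of version tracking and stage promotion. It is an illustration under stated assumptions, not the API of any particular registry product: `ModelRegistry`, `ModelVersion`, the stage names, and the `s3://example-bucket/...` URIs are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    metrics: Dict[str, float]
    stage: str = "staging"  # assumed lifecycle: staging -> production -> archived


@dataclass
class ModelRegistry:
    """In-memory stand-in for a registry service (hypothetical, illustration only)."""

    _models: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str, metrics: Dict[str, float]) -> ModelVersion:
        """Store a new version; version numbers start at 1 and increase by 1."""
        versions = self._models.setdefault(name, [])
        entry = ModelVersion(len(versions) + 1, artifact_uri, dict(metrics))
        versions.append(entry)
        return entry

    def promote(self, name: str, version: int) -> ModelVersion:
        """Move one version to production and archive the previous production version."""
        versions = self._models[name]
        if not 1 <= version <= len(versions):
            raise ValueError(f"unknown version {version} for model {name!r}")
        for entry in versions:
            if entry.stage == "production":
                entry.stage = "archived"
        target = versions[version - 1]
        target.stage = "production"
        return target

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the version currently marked as production, if any."""
        return next((v for v in self._models.get(name, []) if v.stage == "production"), None)


# Example usage with made-up model names and metrics.
registry = ModelRegistry()
registry.register("churn", "s3://example-bucket/churn/v1", {"auc": 0.81})
registry.register("churn", "s3://example-bucket/churn/v2", {"auc": 0.84})
registry.promote("churn", 1)
registry.promote("churn", 2)  # v1 is archived, v2 becomes production
```

In this sketch a rollback is simply another `promote` call that points back at an earlier version, which is one reason registries keep every version rather than overwriting artifacts.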

ML Model Monitoring & Observability

Model monitoring has become increasingly sophisticated, with 94% of organizations now implementing comprehensive monitoring for data drift, concept drift, and model performance degradation. The latest monitoring solutions leverage distributed tracing to provide end-to-end visibility across the ML pipeline. Anomaly detection systems now use reinforcement learning to automatically detect and alert on unusual patterns, reducing false positives by 68% compared to traditional threshold-based approaches. The emergence of ML-specific observability platforms has made it easier to track model behavior in production and ensure compliance with regulatory requirements.

Advanced ML Model Monitoring Dashboard 2025. Source: EvidentlyAI ML Monitoring 2025

94% of organizations monitor for data and concept drift

68% reduction in false positives with AI-powered anomaly detection

83% faster mean time to detect (MTTD) model degradation

75% of enterprises have automated rollback mechanisms for failed models
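
As one concrete illustration of the data drift monitoring described in this section, the sketch below computes the Population Stability Index (PSI) between a reference sample and a production sample of a single numeric feature. PSI is one common drift statistic, not necessarily what any given monitoring platform uses. The function names, the bin count, and the 0.1 / 0.25 cut-offs (a widely used rule of thumb) are assumptions for illustration.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` relative to `expected`.

    Bins are equal-width over the range of `expected`; values outside
    that range are clamped into the first or last bin.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant features

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps the logarithm finite for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_level(value: float) -> str:
    """Map a PSI value to a label using the common 0.1 / 0.25 rule of thumb."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"


# Example: a reference sample and a production sample shifted upward.
reference = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]
level = drift_level(psi(reference, production))
```

A check like this would typically run per feature on a schedule, with the resulting label feeding an alert or an automated rollback decision.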

Feature Stores & Data Quality

Feature stores have become the backbone of modern ML systems, with 89% of enterprises now using them (up from 41% in 2023). The latest feature store implementations support real-time feature serving with latency under 5 ms, enabling use cases like fraud detection and personalized recommendations. Data quality monitoring has also advanced, with automated data validation frameworks that detect anomalies in training and serving data. The integration of data contracts between data producers and consumers has reduced data quality issues by 72%, while feature versioning has improved model reproducibility across different environments.

89% of enterprises use feature stores (up from 41% in 2023)

72% reduction in data quality issues with data contracts

Sub-5ms latency for real-time feature serving

85% of organizations now implement automated data validation
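
The data contracts and automated validation mentioned in this section can be sketched as a small schema check that a producer runs before publishing records. This is a simplified illustration, not a specific validation framework: `FieldRule`, `CONTRACT`, and the field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class FieldRule:
    dtype: type
    nullable: bool = False
    min_value: Optional[float] = None
    max_value: Optional[float] = None


# Hypothetical contract agreed between a data producer and its consumers.
CONTRACT: Dict[str, FieldRule] = {
    "user_id": FieldRule(int),
    "amount": FieldRule(float, min_value=0.0),
    "country": FieldRule(str, nullable=True),
}


def validate(record: Dict[str, Any], contract: Dict[str, FieldRule]) -> List[str]:
    """Return a list of contract violations; an empty list means the record passes."""
    errors: List[str] = []
    for name, rule in contract.items():
        if name not in record:
            errors.append(f"{name}: missing field")
            continue
        value = record[name]
        if value is None:
            if not rule.nullable:
                errors.append(f"{name}: null not allowed")
            continue
        if not isinstance(value, rule.dtype):
            errors.append(f"{name}: expected {rule.dtype.__name__}, got {type(value).__name__}")
            continue
        if rule.min_value is not None and value < rule.min_value:
            errors.append(f"{name}: {value} is below minimum {rule.min_value}")
        if rule.max_value is not None and value > rule.max_value:
            errors.append(f"{name}: {value} is above maximum {rule.max_value}")
    return errors


# Example records: the first satisfies the contract, the second violates it three ways.
good = validate({"user_id": 1, "amount": 9.5, "country": None}, CONTRACT)
bad = validate({"user_id": "1", "amount": -2.0}, CONTRACT)
```

Running the same contract on both the training and the serving path is one way to catch the training/serving mismatches that validation frameworks are meant to detect.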

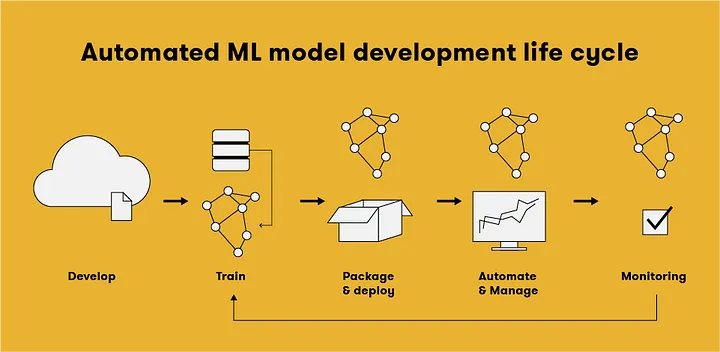

Model Deployment Strategies

The deployment landscape has evolved to support increasingly complex ML applications. Canary deployments are now used by 82% of organizations (up from 47% in 2023), while A/B testing frameworks have become more sophisticated, enabling multi-armed bandit approaches for dynamic traffic allocation. The rise of serverless ML deployments has simplified scaling, with 65% of inference workloads now running on serverless platforms. Model compression techniques like quantization and pruning have reduced model sizes by 4-8x with minimal accuracy loss, enabling edge deployment for latency-sensitive applications.

Advanced ML Deployment Strategies 2025. Source: Stackademic: MLOps Model Deployment Strategies 2025

82% of organizations use canary deployments for ML models

65% of inference workloads run on serverless platforms

4-8x model size reduction with advanced compression

92% of companies now implement blue/green deployments
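
The multi-armed bandit approach to traffic allocation mentioned in this section can be illustrated with Thompson sampling, one common bandit algorithm (the article does not say which algorithm any given framework uses). In the sketch below, `ThompsonRouter`, `simulate`, the variant names, and the success rates are hypothetical; "success" stands for whatever binary outcome a team optimizes, such as a click or a correct prediction.

```python
import random
from typing import Dict, List, Optional


class ThompsonRouter:
    """Routes each request to a model variant using Beta-Bernoulli Thompson sampling."""

    def __init__(self, variants: List[str], seed: Optional[int] = None) -> None:
        self._rng = random.Random(seed)
        # Beta(1, 1) prior per variant, stored as [alpha, beta].
        self._stats: Dict[str, List[int]] = {v: [1, 1] for v in variants}

    def choose(self) -> str:
        """Sample a plausible success rate per variant and pick the highest."""
        samples = {v: self._rng.betavariate(a, b) for v, (a, b) in self._stats.items()}
        return max(samples, key=samples.get)

    def record(self, variant: str, success: bool) -> None:
        """Update the posterior for the variant that served the request."""
        self._stats[variant][0 if success else 1] += 1


def simulate(true_rates: Dict[str, float], rounds: int, seed: int) -> Dict[str, int]:
    """Simulate traffic against made-up success rates and count requests per variant."""
    router = ThompsonRouter(list(true_rates), seed=seed)
    outcomes = random.Random(seed + 1)  # separate stream for simulated outcomes
    counts = {v: 0 for v in true_rates}
    for _ in range(rounds):
        variant = router.choose()
        counts[variant] += 1
        router.record(variant, outcomes.random() < true_rates[variant])
    return counts


# Example: the candidate model is assumed to succeed far more often than the baseline.
traffic = simulate({"baseline": 0.1, "candidate": 0.5}, rounds=2000, seed=7)
```

Unlike a fixed canary split, the allocation here shifts toward the better-performing variant as evidence accumulates, while still occasionally sampling the weaker one.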

MLOps for Large Language Models

The rise of large language models (LLMs) has created new challenges for MLOps. Organizations are adopting specialized frameworks for LLMOps, with 73% implementing retrieval-augmented generation (RAG) systems to ground model outputs in enterprise knowledge bases. Parameter-efficient fine-tuning techniques like LoRA and QLoRA have reduced the computational cost of adapting foundation models by 85%. The emergence of model distillation techniques has enabled the creation of smaller, more efficient models that retain 95% of the performance of their larger counterparts while being 10x faster to deploy and 5x cheaper to operate.

73% of organizations use RAG for LLM deployments

85% reduction in fine-tuning costs with parameter-efficient methods

10x faster deployment with distilled models

5x lower operating costs for distilled vs. full-size models
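
To show why parameter-efficient methods such as LoRA are cheaper to train, the sketch below counts trainable parameters for a single weight matrix. LoRA freezes the original `d_out x d_in` matrix and trains two low-rank factors of shapes `d_out x r` and `r x d_in`. The dimensions and rank used here are illustrative assumptions, and the count covers trainable parameters only; it is not a derivation of the 85% cost figure above.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters updated when fine-tuning the full weight matrix."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two LoRA factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_in + d_out)


# Illustrative numbers: a 4096 x 4096 projection adapted with rank 8.
d_in, d_out, rank = 4096, 4096, 8
full = full_params(d_in, d_out)                  # 16,777,216 frozen weights
lora = lora_trainable_params(d_in, d_out, rank)  # 65,536 trainable weights
fraction = lora / full                           # 1/256, about 0.39%
```

The trainable fraction shrinks as the matrix grows or the rank drops, which is why adapter ranks are usually kept small relative to the hidden size.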

MLOps Security & Governance

As ML systems become more pervasive, security and governance have moved to the forefront. Zero-trust security models are now implemented by 81% of enterprises for their ML infrastructure (up from 32% in 2023). New tools for model explainability and bias detection provide transparency into model decisions, with 78% of organizations now requiring explainability for all production models. The adoption of ML-specific security tools has increased by 145% year-over-year, addressing vulnerabilities like model inversion, membership inference, and prompt injection attacks in LLM applications.

81% of enterprises implement zero-trust for ML infrastructure

78% require explainability for production models

145% YoY increase in ML security tool adoption

67% of organizations now have dedicated ML security teams

The Future of MLOps

Looking ahead, several trends are shaping the future of MLOps. The convergence of MLOps, LLMOps, and traditional DevOps is creating unified AI platforms that streamline the entire ML lifecycle. AutoML 3.0 is automating more aspects of model development, while causal ML is providing deeper insights into model behavior. The rise of federated learning and privacy-preserving ML is enabling secure collaboration across organizations without sharing raw data. As AI regulations evolve, tools for automated compliance and audit trails are becoming standard components of the MLOps stack, ensuring responsible AI deployment at scale.

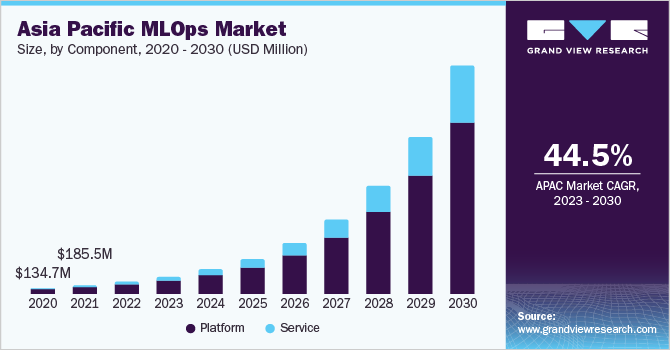

Future Trends in MLOps 2025-2030. Source: Grand View Research 2025

AutoML 3.0 reduces model development time by 75%

Federated learning adoption grew 3x in 2024-2025

90% of enterprises will have AI governance frameworks by 2026

70% of ML pipelines will be fully automated by 2026
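
The federated learning trend mentioned in this section rests on a simple aggregation step: each organization trains locally and shares only model weights, which a coordinator averages in proportion to each participant's sample count (the federated averaging idea). The sketch below shows that step alone, under simplifying assumptions: weights are flat lists of floats, and `federated_average` and the example values are hypothetical.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client weight vectors, weighted by each client's number of samples."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client weight vector")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all weight vectors must have the same length")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Example: two clients, the second holding three times as much data as the first.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

Only the weight vectors and sample counts cross organizational boundaries here; the raw training data never leaves each client.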

